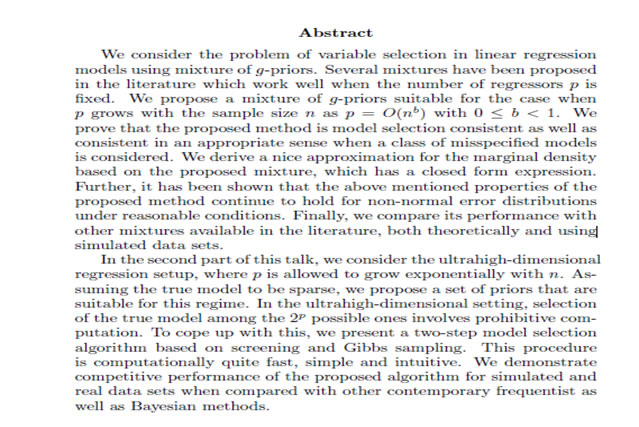

Abstract: Passive transport models are equations of advection-diffusion type. In most applications involving passive transport, the advective fields are of much greater magnitude than molecular diffusion.
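For orientation, a generic passive scalar equation of this type (notation ours, chosen for illustration and not taken from the talk) for a concentration $u(t,x)$ transported by a velocity field $b(x)$ with molecular diffusivity $\varepsilon > 0$ is shown below. The strong-advection regime corresponds to $\varepsilon$ being small relative to the size of $b$; the time change $s = \varepsilon t$, $v(s,x) = u(s/\varepsilon, x)$ moves the large factor $\varepsilon^{-1}$ in front of the advection term.

$$\partial_t u + b(x)\cdot\nabla u = \varepsilon\,\Delta u \quad\Longleftrightarrow\quad \partial_s v + \frac{1}{\varepsilon}\, b(x)\cdot\nabla v = \Delta v.$$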

Our results have close links to

This talk will illustrate the theoretical results via various interesting examples. We address some well-known advective fields such as Euclidean motions, the Taylor-Green cellular flows, the cat’s eye flows and a special class of the Arnold-Beltrami-Childress (ABC) flows. We will also comment on certain examples of hyperbolic or Anosov flows. Some of the results to be presented in this talk can be found in the following

Publication: T. Holding, H. Hutridurga, J. Rauch. Convergence along mean flows, SIAM J Math.

Abstract: We consider the problem of computationally efficient prediction from high-dimensional and highly correlated predictors in challenging settings where accurate variable selection is effectively impossible. Direct application of penalization or Bayesian methods implemented with Markov chain Monte Carlo can be computationally daunting and unstable. Hence, some type of dimensionality reduction prior to statistical analysis is in order. Common solutions include application of screening algorithms to reduce the regressors, or dimension reduction using projections of the design matrix. The former approach can be highly sensitive to the threshold choice in finite samples, while the latter can perform poorly in very high-dimensional settings. We propose a Targeted Random Projection (TARP) approach that combines positive aspects of both strategies to boost performance. In particular, we propose to use information from independent screening to order the inclusion probabilities of the features in the projection matrix used for dimension reduction, leading to data-informed sparsity. Theoretical results on the predictive accuracy of TARP are discussed in detail, along with its computational complexity. Simulated data examples and real data applications are given to illustrate gains relative to a variety of competitors.

Abstract: Henry and Parusiński proved that the Lipschitz right classification of function germs admits continuous moduli. This allows us to introduce the notion of Lipschitz simple germs and to list all such germs. We will present the method of classification in this talk and mention some open problems.

Abstract: In this talk, the likelihood construction is explained under different censoring schemes. Further, the techniques for estimation of the unknown parameters of the survival model are discussed under these censoring schemes.

Abstract: In this talk, the change point problem in the hazard rate is considered. The Lindley hazard change point model is discussed, with its application to modeling bone marrow transplant data. Further, a general hazard regression change point model is discussed, with the exponential and Weibull distributions as special cases.

Abstract: A *simplicial cell complex* K of dimension d is a poset isomorphic to the face poset of a d-dimensional simplicial CW-complex X. If a topological space M is homeomorphic to X, then K is said to be a *pseudotriangulation* of M. In 1974, Pezzana proved that every connected closed PL d-manifold admits a (d+1)-vertex pseudotriangulation. To such a pseudotriangulation of a PL d-manifold one can associate a (d+1)-regular colored graph, called a crystallization of the manifold. In dimension 2, I shall present a proof of the classification of closed surfaces using crystallizations. This concept has some important higher-dimensional analogs, especially in dimensions 3 and 4. In dimensions 3 and 4, I shall give lower bounds on the number of facets in a pseudotriangulation of a PL manifold. I shall also talk about the regular genus (a higher-dimensional analog of genus) of PL d-manifolds, and then show the importance of the regular genus in dimension 4. Additivity of the regular genus has been proved for a huge class of PL 4-manifolds. We have some observations on the regular genus which are related to the 4-dimensional smooth Poincaré conjecture.

Abstract: Rayleigh-Bénard convection is a classical extended dissipative system which shows a plethora of bifurcations and patterns. In this talk, I'll present the results of our investigation of bifurcations and patterns near the onset of Rayleigh-Bénard convection in low-Prandtl-number fluids. The investigation is carried out by performing direct numerical simulations (DNS) of the governing equations. Low-dimensional modeling of the system using the DNS data is also done to understand the origin of different flow patterns. Our investigation reveals a rich variety of bifurcation structures involving pitchfork, Hopf, homoclinic and Neimark-Sacker bifurcations.

Abstract: In this talk, we will discuss some recent results on the existence and uniqueness of strong solutions of certain classes of stochastic PDEs in the space of tempered distributions. We show that these solutions can be constructed from the solutions of "related" finite-dimensional stochastic differential equations driven by the same Brownian motion. We will also discuss a criterion, called the Monotonicity inequality, which implies the uniqueness of strong solutions.

Abstract: The investigation of solute dispersion is an interesting topic of research owing to its widespread applications in various fields such as biomedical engineering and physiological fluid dynamics. The aim of the present study is to understand the different physiological processes involved in solute dispersion in blood flow by assuming relevant non-Newtonian fluid models. The axial solute dispersion process in steady and unsteady non-Newtonian fluid flow in a straight tube is analyzed in the presence and absence of absorption at the tube wall. The pulsatile nature of blood flow is considered for the unsteady case. Owing to the non-Newtonian nature of blood at low shear rates in small vessels, the Casson, Herschel-Bulkley, Carreau and Carreau-Yasuda fluid models, which are the most relevant for blood flow analysis, are considered. The three transport coefficients, i.e., the exchange, convection and dispersion coefficients, which describe the whole dispersion process in the system, are determined. The mean concentration of solute is analyzed at all times. A comparative study of solute dispersion is made between the Newtonian and non-Newtonian fluid models. Also, solute dispersion in single- and two-phase models is compared at all times for different radii of micro blood vessels.

Abstract: Quasilinear symmetric and symmetrizable hyperbolic systems have a wide range of applications in engineering and physics, including the unsteady Euler and potential equations of gas dynamics, the inviscid magnetohydrodynamic (MHD) equations, the shallow water equations, non-Newtonian fluid dynamics, and the Einstein field equations of general relativity. In the past, the Cauchy problem for smooth solutions of these systems has been studied by several mathematicians using semigroup approaches and fixed point arguments. In recent work of M. T. Mohan and S. S. Sritharan, the local solvability of symmetric hyperbolic systems is established using two different methods, viz. a local monotonicity method and a frequency truncation method. They also prove the local existence and uniqueness of solutions of symmetrizable hyperbolic systems using a frequency truncation method. Later, they established the local solvability of the stochastic quasilinear symmetric hyperbolic system perturbed by Lévy noise using a stochastic generalization of the localized Minty-Browder technique. Under a smallness assumption on the initial data, global solvability in the multiplicative noise case is also proved. The aim of this talk is to give an overview of these new local solvability methods and their applications.

Abstract: In this talk, we present the moduli problem of rank 2 torsion-free Hitchin pairs of fixed Euler characteristic χ on a reducible nodal curve. We describe the moduli space of the Hitchin pairs. We define the analogue of the classical Hitchin map and describe the geometry of the general Hitchin fibre. Time permitting, we will also talk about joint work with Balaji and Nagaraj on the degeneration of the moduli space of Hitchin pairs.

Abstract: The formalism of an “abelian category” is meant to axiomatize the operations of linear algebra. From there, the notion of a “derived category” as the category of complexes “up to quasi-isomorphism” is natural, motivated in part by topology. The formalism of t-structures allows one to construct new abelian categories which are quite useful in practice (giving rise to new cohomology theories like intersection cohomology, for example).

Abstract: Using equivariant obstruction theory, we construct equivariant maps from certain classifying spaces to representation spheres for cyclic groups, products of elementary abelian groups and dihedral groups.

Abstract: Quantile regression provides a more comprehensive description of the relationship between a response and covariates of interest than mean regression. When the response is subject to censoring, estimating the conditional mean requires strong distributional assumptions on the error, whereas (most) conditional quantiles can be estimated distribution-free. Although conceptually appealing, quantile regression for censored data is challenging due to computational and theoretical difficulties arising from the non-convexity of the objective function involved. We consider a working likelihood based on Powell's objective function and place appropriate priors on the regression parameters in a Bayesian framework. In spite of the non-convexity and misspecification issues, we show that the posterior distribution has the strong selection consistency property. We provide a “Skinny” Gibbs algorithm that can be used to sample the posterior distribution with complexity linear in the number of variables, and provide empirical evidence demonstrating the good performance of our approach.
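To make "Powell's objective function" concrete, here is a minimal sketch assuming fixed right censoring at known points $c_i$ (the abstract does not say which censoring type is used; Powell's original formulation censors from below, where `min` becomes `max`). Because quantiles commute with the nondecreasing map $y \mapsto \min(y, c_i)$, the linear predictor is censored inside the check loss. The function names are hypothetical, and this is only the frequentist objective, not the speaker's Bayesian working likelihood or Gibbs sampler.

```python
import numpy as np

def check_loss(u, tau):
    """Quantile check loss rho_tau(u) = u * (tau - 1{u < 0})."""
    u = np.asarray(u, dtype=float)
    return u * (tau - (u < 0).astype(float))

def powell_objective(beta, X, y, c, tau):
    """Powell-type censored quantile objective (assumes right censoring at known c_i).

    The tau-th conditional quantile of the observed response min(Y*, c_i)
    is min(c_i, x_i' beta), so the fitted value is censored at c_i.
    """
    fitted = np.minimum(c, X @ beta)
    return float(check_loss(y - fitted, tau).sum())

# Toy usage: the third response is censored at c = 2.0.
X = np.array([[1.0, 0.5], [1.0, 2.0], [1.0, 3.0]])
y = np.array([1.2, 2.0, 2.0])
c = np.array([5.0, 5.0, 2.0])
print(powell_objective(np.array([0.5, 0.8]), X, y, c, tau=0.5))
```

The objective is piecewise linear and non-convex in `beta` because of the `min`, which is the source of the computational difficulty mentioned in the abstract.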

Abstract: Given a closed smooth Riemannian manifold M, the Laplace operator is known to possess a discrete spectrum of eigenvalues going to infinity. We are interested in the properties of the nodal sets and nodal domains of the corresponding eigenfunctions in the high energy (semiclassical) limit. We focus on some recent results on the size of nodal domains and tubular neighbourhoods of nodal sets of such high energy eigenfunctions (joint work with Bogdan Georgiev).

Abstract: I will define affine Kac-Moody algebras, toroidal Lie algebras and full toroidal Lie algebras twisted by several finite order automorphisms and classify integrable representations of twisted full toroidal Lie algebras.

Abstract: In recent years, one major focus of spatial data modeling has been to connect two contrasting approaches, namely the Markov random field approach and the geostatistical approach. While the geostatistical approach allows flexible modeling of spatial processes and can accommodate continuum spatial variation, it faces a formidable computational burden for large spatial data. On the other hand, spatial Markov random fields facilitate fast statistical computations, but they lack the flexibility to accommodate continuum spatial variation. In this talk, I will discuss novel statistical models and methods which accommodate continuum spatial variation and allow fast matrix-free statistical computations for large spatial data. I will discuss an h-likelihood method for REML estimation and show that the standard errors of these estimates attain the Cramér-Rao lower bound and are thus statistically efficient. I will discuss applications to groundwater arsenic contamination and chlorophyll concentration in the ocean. This is joint work with Debashis Mondal at Oregon State University.

Abstract: It is a well-known result of Hermann Weyl that if alpha is an irrational number in [0,1), then the number of visits of the successive multiples of alpha modulo one to an interval contained in [0,1) is asymptotically proportional to the length of the interval. In this talk we will revisit this problem, now looking at finer joint asymptotics of visits to several intervals with rational endpoints. We observe that the visit distribution can be modelled using random affine transformations; in the case when the irrational is quadratic we obtain a central limit theorem as well. Not much background in probability will be assumed. This is joint work with Jon Aaronson and Michael Bromberg.
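Weyl's theorem says that for irrational alpha the fraction of k ≤ n with {k·alpha} in [a, b) tends to b − a. The minimal sketch below (function name hypothetical) checks this numerically for alpha = √2 − 1 and contrasts it with a rational alpha, for which the statement fails. It illustrates only the classical equidistribution statement, not the finer joint asymptotics discussed in the talk.

```python
import math

def visit_frequency(alpha, a, b, n_terms):
    """Fraction of k = 1..n_terms whose fractional part {k*alpha} lies in [a, b)."""
    hits = 0
    for k in range(1, n_terms + 1):
        frac = (k * alpha) % 1.0  # fractional part of k*alpha
        if a <= frac < b:
            hits += 1
    return hits / n_terms

alpha = math.sqrt(2) - 1  # an irrational number in [0, 1)
print(visit_frequency(alpha, 0.25, 0.5, 100_000))  # close to 0.25, the length of [0.25, 0.5)
print(visit_frequency(0.5, 0.1, 0.4, 100_000))     # rational alpha: only 0 and 0.5 occur, so 0.0
```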

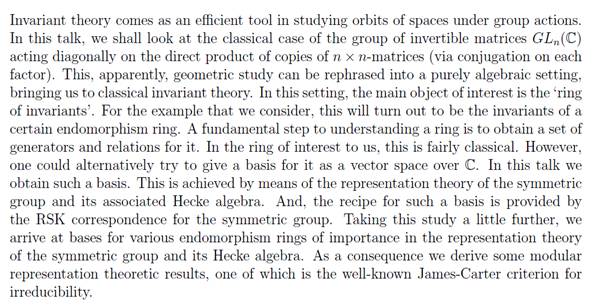

Abstract: The action of GL_n(F_q) on the polynomial ring in n variables has been studied extensively by Dickson, and the invariant ring can be described explicitly. However, the action of the same group on this ring modulo Frobenius powers of the variables is not completely understood. I'll talk about some interesting aspects of this modified version of the problem. More specifically, I'll discuss a conjecture by Lewis, Reiner and Stanton regarding the Hilbert series corresponding to this action and try to prove some special cases of this conjecture.
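For context, Dickson's theorem states that the invariant ring $\mathbb{F}_q[x_1,\dots,x_n]^{GL_n(\mathbb{F}_q)}$ is itself a polynomial ring, generated by the Dickson invariants, which have degrees $q^n - q^i$ for $0 \le i \le n-1$. Its Hilbert series is therefore the product below (for $n = 1$ it reduces to $1/(1 - t^{q-1})$, generated by $x^{q-1}$). The conjecture mentioned above concerns the analogous series for the quotient by Frobenius powers, which this formula does not address.

$$\operatorname{Hilb}\!\left(\mathbb{F}_q[x_1,\dots,x_n]^{GL_n(\mathbb{F}_q)}, t\right) = \prod_{i=0}^{n-1} \frac{1}{1 - t^{\,q^n - q^i}}.$$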

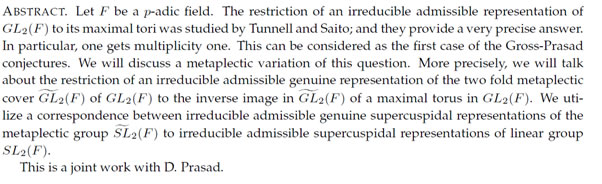

Abstract: One way to understand representations of a group is to ‘restrict’ the representation to its various subgroups, especially to those subgroups which give multiplicity one or finite multiplicity. We shall discuss a few examples of restriction for representations of p-adic groups. Our main examples will be the pairs $(GL_2(F), E^*)$ and $(GL_2(E), GL_2(F))$, where $E/F$ is a quadratic extension of $p$-adic fields. These examples can be considered as low-rank cases of the well-known Gross-Prasad conjectures, where one considers various ‘restrictions’ simultaneously. Further, we consider a similar ‘restriction problem’ when the groups under consideration are certain central extensions of the $F$-points of linear algebraic groups by a finite cyclic group. These are topological central extensions, called ‘covering groups’ or ‘metaplectic groups’. These covering groups are not the $F$-points of any linear algebraic group. We restrict ourselves to two-fold covers of these groups and their ‘genuine’ representations. Covering groups arise naturally in the study of modular forms of half-integral weight. Some results that we will discuss are the outcome of joint work with D. Prasad.

Abstract: It is a well-accepted practice in experimental situations to use auxiliary information to enhance the accuracy of the experiment, i.e., to reduce the experimental error. In the simplest use of auxiliary information, data generated through an experiment are statistically modeled in terms of some assignable source(s) of variation, besides a chance cause of variation. The assignable causes comprise ‘treatment’ parameters and the ‘covariate’ parameter(s). This generates a family of ‘covariate models’, serving as a ‘combination’ of ‘varietal design models’ and ‘regression models’. These are the well-known Analysis of Covariance

Abstract: The recent increase in the use of 3-D magnetic resonance images (MRI) and in the analysis of functional magnetic resonance images (fMRI) in medical diagnostics makes imaging, especially 3-D imaging, very important. Observed images often contain noise, which should be removed in such a way that important image features, e.g., edges, edge structures, and other image details, are preserved, so that subsequent image analyses are reliable. Direct generalizations of existing 2-D image denoising techniques to 3-D images cannot preserve complicated edge structures well, because the edge structures in a 3-D edge surface can be much more complicated than those in a 2-D edge curve. Moreover, the amount of smoothing should be determined locally, depending on local image features and the local signal-to-noise ratio, which is much more challenging in 3-D images due to the large number of voxels. In this talk, I will present a multi-resolution and locally adaptive 3-D image denoising procedure based on local clustering of the voxels. I will provide a few numerical studies which show that the denoising method can work well in many real-world applications. Finally, I will talk about a few future research directions along with some introductory research problems for interested students. Most parts of my talk should be accessible to an audience of diverse academic backgrounds.

Abstract: We shall discuss a new method of computing (integral) homotopy groups of certain manifolds in terms of the homotopy groups of spheres. The techniques used in this computation also yield formulae for homotopy groups of connected sums of sphere products and CW complexes of a similar type. In all the families of spaces considered here, we verify a conjecture of J. C.

Abstract: The theory of pseudo-differential operators provides a flexible tool for treating certain problems in linear partial differential equations. The Gohberg lemma on the unit circle estimates from below the distance (in norm) from a given zero-order operator to the set of compact operators in terms of the symbol. In this talk, I will introduce a version of the Gohberg lemma on compact Lie groups using the global calculus of pseudo-differential operators. Applying this, I will obtain bounds for the essential spectrum and a criterion for an operator to be compact. The conditions used will be given in terms of the matrix-valued symbols of operators.

Abstract: Let $F$ be a $p$-adic field. The restriction of an irreducible admissible representation of $GL_{2}(F)$ to its maximal tori was studied by Tunnell and Saito, who provide a very precise answer; in particular, one gets multiplicity one. This can be considered as the first case of the Gross-Prasad conjectures. We will discuss a metaplectic variation of this question. More precisely, we will talk about the restriction of an irreducible admissible genuine representation of the two-fold metaplectic cover $\widetilde{GL}_2(F)$ of $GL_2(F)$ to the inverse image in $\widetilde{GL}_2(F)$ of a maximal torus in $GL_2(F)$. We utilize a correspondence between irreducible admissible genuine supercuspidal representations of the metaplectic group $\widetilde{SL}_2(F)$ and irreducible admissible supercuspidal representations of the linear group $SL_2(F)$. This is joint work with D. Prasad.

Abstract: We will describe the problem of mod p reduction of p-adic Galois representations. For two-dimensional crystalline representations of the local Galois group $\mathrm{Gal}(\overline{\mathbb{Q}}_p/\mathbb{Q}_p)$, the reduction can be computed using the compatibility of the p-adic and mod p Local Langlands Correspondences; this method was first introduced by Christophe Breuil in 2003. After giving a brief sketch of the history of the problem, we will discuss how the reductions behave for representations with slopes in the half-open interval [1, 2). In the relevant cases of reducible reduction, one may also ask whether the reduction is peu or très ramifiée. We will try to sketch an answer to this question, if time permits. (Joint works with Eknath Ghate, and also with Sandra Rozensztajn for slope 1.)

Abstract: Let M be a compact manifold without boundary. Define a smooth real-valued function on the space of Riemannian metrics on M by taking the L^p-norm of the Riemannian curvature, for p ≥ 2. Compact irreducible locally symmetric spaces are critical metrics for this functional. I will prove that rank 1 symmetric spaces are local minima for this functional by studying the stability of the functional at those metrics. I will also show examples of irreducible symmetric metrics which are not local minima for it.

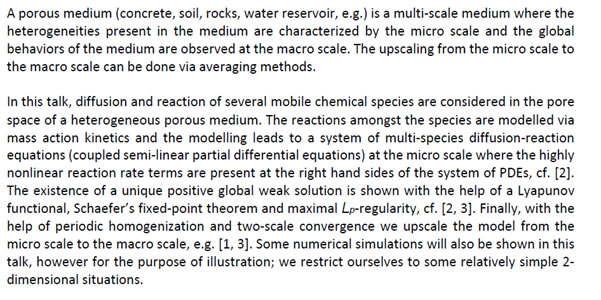

Abstract: Unfolding operators have been introduced and used to study homogenization problems. They were initially introduced for problems with rapidly oscillating coefficients and porous domains. Later, the method was developed for domains with oscillating boundaries, typically with rectangular or pillar-type boundaries, which are classified as non-smooth.

Abstract: We describe three approaches to the classical p-completion (or localization) of a topological space: as spaces, through cosimplicial space resolutions, and through mapping algebras, and show how they are related through appropriate "universal" systems of higher cohomology operations. All terms involved will be explained in the talk.

Abstract: We deal with the following eigenvalue optimization problem: given a bounded open disk $B$ in the plane, how should one place an obstacle $P$ of fixed shape and size within $B$ so as to maximize or minimize the fundamental eigenvalue $\lambda_1$ of the Dirichlet Laplacian on $B \setminus P$? This means that we want to extremize the function $\rho \mapsto \lambda_1(B \setminus \rho(P))$, where $\rho$ runs over the set of rigid motions such that $\rho(P) \subset B$. We answer this problem in the case where $P$ is invariant under the action of a dihedral group $D_{2n}$, and where the distance from the center of the obstacle $P$ to the boundary is monotone as a function of the argument between two axes of symmetry. The extremal configurations correspond to the cases where an axis of symmetry of $P$ coincides with a diameter of $B$. The maximizing and the minimizing configurations are identified.

Abstract: Matched case-control studies are popular designs used in epidemiology for assessing the effects of exposures on binary traits. Modern investigations increasingly enjoy the ability to examine a large number of exposures in a comprehensive manner. However, risk factors often tend to be related in a non-trivial way, undermining efforts to identify the truly important ones using standard analytic methods. Epidemiologists often use data reduction techniques, grouping the prognostic factors using a thematic approach, with themes deriving from biological considerations. However, it is important to account for potential misspecification of the themes to avoid false positive findings. To this end, we propose shrinkage-type estimators based on Bayesian penalization methods. An extensive simulation study comparing the Bayesian and frequentist estimates under various scenarios is reported. The methodology is illustrated using data from a matched case-control study investigating the role of polychlorinated biphenyls in understanding the etiology of non-Hodgkin's lymphoma.

Abstract: Cowen and Douglas have shown that the curvature is a complete invariant for a certain class of operators. Several ramifications of this result will be discussed.

Abstract: It is well known that the center of U(gl_N) is finitely generated as an algebra. Gelfand defined central elements (Gelfand invariants) T_k for every positive integer k, and it is known that the first N of them generate the center. The decomposition of tensor product modules for a reductive Lie algebra is a classical problem. It is known that each Gelfand invariant acts as a scalar on an irreducible submodule of a tensor product module. In this talk, for each k we define several operators which commute with the gl_N action but do not act as scalars. This means that these operators take one highest weight vector to another highest weight vector, which gives a practical algorithm to produce more highest weight vectors once we know one of them. Further, the sum of these operators is T_k. If time permits, we will define some of these operators in the generality of Kac-Moody Lie algebras.
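For reference, Gelfand's central elements are usually written as "traces of powers" of the matrix $E = (E_{ij})$ of standard generators of $U(\mathfrak{gl}_N)$. With one common index convention (sources differ on $E_{ij}$ versus $E_{ji}$), they read as below; for example, $T_1 = \sum_i E_{ii}$ and $T_2 = \sum_{i,j} E_{ij} E_{ji}$ is the quadratic Casimir element.

$$T_k = \sum_{i_1,\dots,i_k=1}^{N} E_{i_1 i_2} E_{i_2 i_3} \cdots E_{i_k i_1}, \qquad k \ge 1.$$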

Abstract: The theory of pseudo-differential operators has provided a very powerful and flexible tool for treating certain problems in linear partial differential equations. The importance of the Heisenberg group in general harmonic analysis and in problems involving partial differential operators on manifolds is well established. In this talk, I will introduce pseudo-differential operators with operator-valued symbols on the Heisenberg group. I will give necessary and sufficient conditions on the symbols for these operators to be in the Hilbert-Schmidt class. I will identify these Hilbert-Schmidt operators with the Weyl transforms with symbols in $L^2(\mathbb{R}^{2n+1} \times \mathbb{R}^{2n+1})$. I will also provide a characterization of trace class pseudo-differential operators on the Heisenberg group, and a trace formula for these trace class operators will be presented.

Abstract: A finite separable extension E of a field F is called primitive if there are no intermediate extensions. It is called a solvable extension if the group of automorphisms of its galoisian closure over F is a solvable group. We show that a solvable primitive extension E of F is uniquely determined (up to F-isomorphism) by its galoisian closure and characterise the extensions D of F which are the galoisian closures of some solvable primitive extension E of F.

Abstract: The genesis of “zero” as a number, which even a child uses so casually today, is a long and involved story. Many of those concerned with the history of its evolution today accept that the number “zero”, in its true potential, as we use it in present-day mathematics, has its root, conceptually as well as etymologically, in the word ‘Śūnya’ of Indian antiquity. It was introduced in India by Hindu mathematicians, eventually became a numeral, the mathematical expression for “nothing”, and via the Arabs went to Europe, where it survived a prolonged battle with the Church (which once banned the use of ‘zero’!) over centuries. However, the time frame of its origin in Indian antiquity is still hotly debated. Furthermore, some recent works even suggest that a trace of the concept, if not its full operational form, might have a Greek origin that traveled to India during the Greek invasion of the northern part of the country. However, from the works on Vedic prosody by Piṅgala (Piṅgalacchandaḥsūtra) [3rd century BC] to the concept of “lopa” in the grammarian Panini (Aṣṭādhyāyī) (400-700 BC, by some modern estimates), it appears very likely that the rich philosophical and socio-academic ambience of Indian antiquity was quite pregnant with the immensity of the concept of ‘Śūnya’: a dichotomy as well as a simultaneity between nothing and everything, the ‘zero’ of the void and that of an all-pervading, ‘fathomless’ infinite. A wide variety of number systems were used across various ancient civilizations, such as the Inca, Egyptian, Mayan, Babylonian, Greek, Roman, Chinese, Arab, Indian, etc. Some of them even had ‘some sort of zero’ in their system! Why, then, is only the Indian zero generally accepted as the ancestor of our modern mathematical zero? Why is it only as late as 1491 that one finds the first ever mention of ‘zero’ in a book from Europe?
In this popular-level lecture, meant for a general audience and based on the mind-boggling natural history of ‘zero’, we would like to discuss, through numerous slides and pictures, the available references to the evolution of, and struggle over, the concept of a place-value based enumeration system with a “zero” in it, in its broader social and philosophical contexts.

Abstract: Logistic regression is an important and widely used regression model for binary responses and is extensively used in many applied fields.

Abstract: High dimension, low sample size data pose great challenges for the existing statistical methods. For instance, several popular methods for cluster analysis based on the Euclidean distance often fail to yield satisfactory results for high dimensional data. In this talk, we will discuss a new measure of dissimilarity, called MADD and see how it can be used to achieve perfect clustering in high dimensions. Another important problem in cluster analysis is to find the number of clusters in a data set. We will see that many existing methods for this problem can be modified using MADD to achieve superior performance. A new method for estimating the number of clusters will also be discussed. We will present some theoretical and numerical results in this connection.
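As we understand the usual definition (an assumption on our part, since the abstract does not spell it out), MADD, the mean absolute difference of distances, between observations $x_i$ and $x_j$ averages $\left|\,\|x_i - x_k\| - \|x_j - x_k\|\,\right|$ over all other observations $x_k$, so it compares two points through how they "see" the rest of the sample rather than through their direct distance. A minimal NumPy sketch (function name hypothetical, quadratic loops kept for clarity, not the speakers' implementation):

```python
import numpy as np

def madd_matrix(X):
    """Pairwise MADD dissimilarities for the rows of X (needs n >= 3)."""
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    if n < 3:
        raise ValueError("MADD needs at least three observations")
    # Euclidean distance matrix D[i, k] = ||x_i - x_k||.
    D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    M = np.zeros((n, n))
    for i in range(n):
        for j in range(i + 1, n):
            others = np.ones(n, dtype=bool)
            others[[i, j]] = False  # average only over k != i, j
            M[i, j] = M[j, i] = np.mean(np.abs(D[i, others] - D[j, others]))
    return M

print(madd_matrix([[0.0], [1.0], [3.0]]))
```

For the three points 0, 1 and 3 on a line, the pairs (0, 1) and (0, 3) each get dissimilarity 1 and the pair (1, 3) gets 2. The resulting matrix can be passed to any clustering method that accepts a precomputed dissimilarity.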

Abstract: Most of today's experimentally verifiable scientific research not only requires us to resolve physical features over several spatial and temporal scales but also demands suitable techniques to bridge the information across these scales. In this talk I will provide two examples from mathematical biology and describe these systems at two levels: the micro level and the macro (continuum) level. I will then detail suitable tools from homogenization theory to link these different scales.

Abstract: In this talk, I shall consider high-dimensional moving average (MA) and autoregressive (AR) processes. My goal will be to explore the asymptotics of the eigenvalues of the sample autocovariance matrices. These asymptotics will help in estimating the unknown order of high-dimensional MA and AR processes. Our results will also provide tests of different hypotheses on the coefficient matrices. This talk will be based on joint works with Prof. Arup

Abstract: McKay correspondence relates orbifold cohomology with the cohomology of a crepant resolution. This is a phenomenon in algebraic geometry. It was proved for toric orbifolds by Batyrev and Dais in the nineties. In this talk we present a similar correspondence for omnioriented quasitoric orbifolds. The interesting feature is how we deal with the absence of an algebraic or analytic structure. In a suitable sense, our correspondence is a generalization of the algebraic one.

Abstract: We show that if a modular cuspidal eigenform f of weight 2k is 2-adically close to an elliptic curve E over the field of rational numbers Q which has a cyclic rational 4-isogeny, then the n-th Fourier coefficient of f is non-zero for some n in the short interval (X, X + cX^{1/4}), for all X >> 0 and for some c > 0. We use this fact to produce non-CM cuspidal eigenforms f of level N > 1 and weight k > 2 such that $i_f(n) \ll n^{1/4}$ for all $n \gg 0$.

Abstract: Given an irreducible polynomial f(x) with integer coefficients and a prime number p, one wishes to determine whether f(x) is a product of distinct linear factors modulo p. When f(x) is a solvable polynomial, this question is satisfactorily answered by the Class Field Theory. Attempts to find a non-abelian Class Field Theory lead to the development of an area of mathematics called the Langlands program.

Abstract: Affine Kac-Moody algebras are infinite dimensional analogs of semi-simple Lie algebras and have a central role both in Mathematics and Mathematical Physics. Representation theory of these algebras has grown tremendously since their independent introduction by R.V. Moody and V.G.

Abstract: The aim of this lecture is to consider a singularly perturbed semilinear elliptic problem with power nonlinearity in annular domains of R^{2n} and to show the existence of two orthogonal S^{n−1} concentrating solutions. We will discuss some issues involved in the proof in the context of S^1 concentrating solutions of a similar nature.

Abstract: Let E1 and E2 be elliptic curves defined over the field of rational numbers with good ordinary reduction at an odd prime p and with irreducible, equivalent mod p Galois representations. In this talk, we shall discuss the variation in the parity of the ranks of E1 and E2 over certain number fields.

Abstract: The curvature of a contraction T in the Cowen-Douglas class is bounded above by the curvature of the backward shift operator. However, in general, an operator satisfying the curvature inequality need not be contractive. In this talk we characterize a slightly smaller class of contractions using a stronger form of the curvature inequality. Along the way, we find conditions on the metric of the holomorphic Hermitian vector bundle E corresponding to the operator T in the Cowen-Douglas class which ensure negative definiteness of the curvature function. We obtain a generalization for commuting tuples of operators in the Cowen-Douglas class.

Abstract: In this talk we will introduce the theory of p-adic families of modular forms and, more generally, p-adic families of automorphic forms. The notion of a p-adic family of modular forms was introduced by Serre and later generalized in various directions by the work of Hida, Coleman-Mazur, Buzzard and various other mathematicians. The study of p-adic families plays a crucial role in modern number theory, and in recent years many classical, long-standing problems in number theory have been solved using p-adic families. I will state some of the problems on p-adic families of automorphic forms that I have worked on in the past and plan to work on in the future.

Abstract: Fractal interpolation functions (FIFs), a notion introduced by Michael Barnsley, form a basis of a constructive approximation theory for non-differentiable functions. In view of their diverse applications, there has been steadily increasing interest in particular properties of FIFs such as Hölder continuity, convergence, stability and differentiability. Apart from these properties, a good interpolant/approximant should reflect geometric shape properties, which are described mathematically in terms of positivity, monotonicity and convexity. These properties act as constraints on the approximation problem.
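To make the notion concrete, here is a minimal sketch of the standard affine construction, assuming data points x_0 < ... < x_N and vertical scaling factors |d_i| < 1. Each map w_i(x, y) = (a_i x + e_i, c_i x + d_i y + f_i) sends the end points of the data to the end points of the i-th subinterval. The FIF is the fixed point of the associated Read-Bajraktarevic operator, approximated here by a fixed number of iterations starting from the piecewise-linear interpolant. All function names are hypothetical, and the shape-preserving (positive, monotone, convex) variants discussed in the talk are not implemented.

```python
from bisect import bisect_right


def _interval_index(x, xs):
    """Index i such that x lies in [xs[i-1], xs[i]] (clamped at the ends)."""
    return min(max(bisect_right(xs, x), 1), len(xs) - 1)


def linear_interp(x, xs, ys):
    """Piecewise-linear interpolant of the data; used as the starting function."""
    i = _interval_index(x, xs)
    t = (x - xs[i - 1]) / (xs[i] - xs[i - 1])
    return ys[i - 1] + t * (ys[i] - ys[i - 1])


def fif_maps(xs, ys, ds):
    """Coefficients (a, e, c, d, f) of w_i(x, y) = (a x + e, c x + d y + f), i = 1..N.

    Chosen so that w_i maps (x_0, y_0) to (x_{i-1}, y_{i-1}) and (x_N, y_N) to (x_i, y_i).
    ds are the vertical scaling factors, assumed to satisfy |d_i| < 1.
    """
    x0, xn, y0, yn = xs[0], xs[-1], ys[0], ys[-1]
    span = xn - x0
    maps = []
    for i in range(1, len(xs)):
        d = ds[i - 1]
        a = (xs[i] - xs[i - 1]) / span
        e = (xn * xs[i - 1] - x0 * xs[i]) / span
        c = (ys[i] - ys[i - 1] - d * (yn - y0)) / span
        f = (xn * ys[i - 1] - x0 * ys[i] - d * (xn * y0 - x0 * yn)) / span
        maps.append((a, e, c, d, f))
    return maps


def fif_eval(x, xs, ys, maps, depth=40):
    """Approximate the FIF at x by applying the Read-Bajraktarevic operator `depth` times."""
    if depth == 0:
        return linear_interp(x, xs, ys)
    a, e, c, d, f = maps[_interval_index(x, xs) - 1]
    u = (x - e) / a  # L_i^{-1}(x), a point of [x_0, x_N]
    return c * u + d * fif_eval(u, xs, ys, maps, depth - 1) + f
```

With all d_i = 0 the construction reduces to the piecewise-linear interpolant. Nonzero d_i produce a rough, self-affine graph that still passes through every data point.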

Abstract: A subordinated stochastic process X(T(t)) is obtained by time-changing a process X(t) with a positive, non-decreasing stochastic process T(t). The process X(T(t)) is said to be subordinated to the driving process X(t), and the process T(t) is called the directing process.
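As an illustration of the time change, the following is a sketch that assumes X is a standard Brownian motion and T is a gamma process independent of X, with mean rate 1 and variance rate nu. This particular pairing is a choice made for the example. Conditional on an increment dT of the directing process, the increment of X(T(t)) is normal with variance dT. The function name and parameters are hypothetical.

```python
import math
import random


def subordinated_brownian_path(t_max, n_steps, nu=0.5, seed=None):
    """Sample X(T(t)) on a uniform grid of [0, t_max].

    Assumptions: X is standard Brownian motion, T is an independent gamma
    process with E[T(t)] = t and Var[T(t)] = nu * t.
    Returns (grid times t, directing process T(t), subordinated values X(T(t))).
    """
    rng = random.Random(seed)
    dt = t_max / n_steps
    times, clock, values = [0.0], [0.0], [0.0]
    for k in range(1, n_steps + 1):
        # Gamma increment: shape dt/nu, scale nu, so mean dt and variance nu*dt.
        d_clock = rng.gammavariate(dt / nu, nu)
        # Given the random time step, the Brownian increment is N(0, d_clock).
        d_value = math.sqrt(d_clock) * rng.gauss(0.0, 1.0)
        times.append(k * dt)
        clock.append(clock[-1] + d_clock)
        values.append(values[-1] + d_value)
    return times, clock, values
```

Any other non-decreasing process could be substituted for the gamma increments, for example a Poisson process. Only the sampling of d_clock would change.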

Abstract: Many physical phenomena can be modeled using partial differential equations. In this talk, applications of PDEs, in particular of hyperbolic conservation laws, to granular matter theory and crowd dynamics will be shown.

Abstract: The moduli space of vector bundles on a curve was constructed and studied by Mumford, Seshadri and many others. Simpson simplified this and gave a general construction of the moduli of pure sheaves on higher-dimensional projective varieties in characteristic zero. Langer extended it to positive characteristic. Alvarez-Consul and King gave another construction using moduli of representations of the Kronecker quiver.

Abstract: Homogenization is a branch of science in which we try to understand microscopic structures via a macroscopic medium, and it has applications in various branches of science and engineering. The subject developed largely from materials science, in the design of composite materials, although its contemporary applications are far wider. It is a process of understanding the microscopic behavior of an inhomogeneous medium via a homogenized medium. Mathematically, it is a kind of asymptotic analysis.
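A classical one-dimensional example shows what "understanding an inhomogeneous medium via a homogenized medium" means in practice. The example is not taken from the talk. Assume the problem is -(a(x/ε) u')' = 1 on (0, 1) with u(0) = u(1) = 0 and a 1-periodic coefficient a. As ε → 0, the solution approaches that of the constant-coefficient problem with the harmonic mean a* = ⟨1/a⟩^{-1}, not the arithmetic mean. The sketch below uses the exact 1D flux formula together with midpoint quadrature. The coefficient and the function names are illustrative assumptions.

```python
import math


def harmonic_mean(a, n=10_000):
    """<1/a>^{-1} over one period [0, 1), computed with the midpoint rule."""
    return n / sum(1.0 / a((k + 0.5) / n) for k in range(n))


def u_eps(x, a, eps, n=100_000):
    """Return u(x) for -(a(s/eps) u'(s))' = 1 on (0, 1), u(0) = u(1) = 0.

    In 1D the flux sigma = a u' satisfies sigma' = -1, so sigma(s) = C - s and
    u(x) = integral_0^x (C - s) / a(s/eps) ds, with C fixed by u(1) = 0.
    """
    h = 1.0 / n
    mids = [(k + 0.5) * h for k in range(n)]
    inv_a = [1.0 / a(s / eps) for s in mids]
    C = sum(s * w for s, w in zip(mids, inv_a)) / sum(inv_a)
    return sum((C - s) * w * h for s, w in zip(mids, inv_a) if s < x)


def u_hom(x, a_star):
    """Homogenized solution of -a_star u'' = 1, u(0) = u(1) = 0."""
    return x * (1.0 - x) / (2.0 * a_star)


# Illustrative 1-periodic coefficient, bounded below by 1; its harmonic mean is sqrt(3).
a = lambda y: 2.0 + math.sin(2.0 * math.pi * y)
```

For ε = 0.01, u_eps(0.5, a, 0.01) agrees closely with u_hom(0.5, harmonic_mean(a)). It differs visibly from u_hom(0.5, 2.0), which is the prediction obtained by naively averaging a.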

Abstract: In this talk we will discuss certain aspects of vector bundles over complex projective spaces and projective hypersurfaces. Our focus will be to find conditions under which a vector bundle can be written as a direct sum of smaller rank bundles or when it can be extended to a larger space.

Abstract: Enumerative geometry is a branch of mathematics that deals with the following question: "How many geometric objects are there that satisfy certain constraints?" The simplest example of such a question is "How many lines pass through two distinct points?". A more interesting question is "How many lines in three-dimensional space intersect four generic lines?". An extremely important enumerative question asks "How many rational (genus 0) degree d curves in CP^2 pass through 3d-1 generic points?" Although this question was investigated in the nineteenth century, a complete solution was unknown until the early 1990s, when Kontsevich-Manin and Ruan-Tian announced a formula. In this talk we will discuss some natural generalizations of the above question; in particular, we will look at rational curves on del Pezzo surfaces that have a cuspidal singularity. We will describe a topological method to approach such questions. If time permits, we will also explain how to enumerate genus g curves with a fixed complex structure by comparing their number with the symplectic invariants of a manifold (which essentially count the curves that are solutions of the perturbed d-bar equation).
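For the classical question in CP^2, Kontsevich's recursion computes the number N_d of rational degree-d curves through 3d-1 generic points, starting from N_1 = 1. The sketch below implements that recursion only. It does not cover the cuspidal or del Pezzo counts that are the subject of the talk, and the function name is hypothetical.

```python
from functools import lru_cache
from math import comb


@lru_cache(maxsize=None)
def kontsevich(d):
    """N_d: rational degree-d curves in CP^2 through 3d - 1 generic points."""
    if d == 1:
        return 1  # exactly one line through two points
    total = 0
    for da in range(1, d):
        db = d - da
        # Kontsevich's recursion, summed over splittings d = da + db.
        total += (kontsevich(da) * kontsevich(db) * da * da * db
                  * (db * comb(3 * d - 4, 3 * da - 2)
                     - da * comb(3 * d - 4, 3 * da - 1)))
    return total
```

The first values are 1, 1, 12, 620, 87304 for d = 1, ..., 5. The value N_3 = 12 is the classical count of rational (nodal) cubics through 8 points.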

Abstract: For Gaussian process models, likelihood-based methods are often difficult to use with large, irregularly spaced spatial datasets because of the prohibitive computational burden and substantial storage requirements. Although various approximation methods have been developed to address the computational difficulties, retaining statistical efficiency remains an issue. This talk focuses on statistical methods for approximating likelihoods and score equations. The proposed new unbiased estimating equations are both computationally and statistically efficient; the inverse of the covariance matrix is approximated by a sparse inverse Cholesky approach. A unified framework based on composite likelihood methods is also introduced, which allows for constructing different types of hierarchical low-rank approximations. The performance of the proposed methods is investigated through numerical and simulation studies, and parallel computing techniques are explored for very large datasets. Our methods are applied to nearly 90,000 satellite-based measurements of water vapor levels over a region of the Southeast Pacific Ocean, and to nearly 1 million soil moisture values generated by a numerical model over the Mississippi River basin. The fitted models facilitate a better understanding of the spatial variability of these climate variables.

About the Speaker: Ying Sun is an Assistant Professor of Statistics in the Division of Computer, Electrical and Mathematical Sciences and Engineering (CEMSE) at KAUST. She joined KAUST after one year as an Assistant Professor in the Department of Statistics at the Ohio State University (OSU). Before joining OSU, she was a postdoctoral researcher at the University of Chicago in the research network for Statistical Methods for Atmospheric and Oceanic Sciences (STATMOS), and at the Statistical and Applied Mathematical Sciences Institute (SAMSI) in the Uncertainty Quantification program.

Abstract: In this talk, the least absolute deviation (LAD) estimation procedure and related issues will be discussed for the non-parametric convex regression problem. In addition, based on the concordance and discordance of the observations, a test will be proposed to check whether the unknown non-parametric regression function is convex. Some preliminary ideas for formulating the test statistic, along with its properties, will also be discussed.

Abstract: The general philosophy of Langlands' functoriality predicts that, given two groups H and G, if there exists a 'nice' map between the respective L-groups of H and G, then using this map we can transfer automorphic representations of H to those of G. A few examples of such transfers are the Jacquet-Langlands transfer, endoscopic transfer and base change. On the other hand, by the work of Serre, Hida, Coleman, Mazur and many other mathematicians, we can now construct p-adic families of automorphic forms for various groups. In this talk, we will discuss some examples of Langlands transfers which can be p-adically interpolated to give rise to maps between appropriate p-adic families of automorphic forms.

Abstract: Over the last few years, o-minimal structures have emerged as a natural framework for studying the geometry and topology of singular spaces. They originated in model theory and provide an axiomatic approach to characterizing spaces with tame topology. In this talk, we will first briefly introduce the notion of o-minimal structures and present some of their main properties. Then, we will consider flat currents on pseudomanifolds that are definable in polynomially bounded o-minimal structures. Flat currents induce cohomology restrictions on the pseudomanifolds, and we will show that this cohomology is related to their intersection cohomology.

Abstract: The Grothendieck ring K_0(M) of a model-theoretic structure M was defined by Krajíček and Scanlon as a generalization of the Grothendieck ring of varieties used in motivic integration. I will introduce this concept with some examples and then proceed to define the K-theory of M via a symmetric monoidal category. Prest conjectured that the Grothendieck ring of a non-zero right module M_R is nontrivial when M_R is viewed as a structure in the language of right R-modules. The proof that such a Grothendieck ring is in fact a non-zero quotient of a monoid ring relies on techniques from simplicial homology, combinatorics and lattice theory, as well as algebra. I will also discuss this result, which settled Prest's conjecture in the affirmative.

Abstract: We know how to multiply two real numbers or two complex numbers. In both cases the multiplication is bilinear and norm preserving. It is natural to ask which other R^n admit such a multiplication. We will discuss how this question is related to vector fields on spheres and the answer given by a famous theorem of J. F. Adams.
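R^4 is one space that does admit a multiplication of this kind: Hamilton's quaternion product is bilinear and satisfies |pq| = |p||q|. By Hurwitz's classical theorem, such norm-preserving bilinear products on R^n exist only for n = 1, 2, 4, 8. The sketch below checks the R^4 case numerically. Complex multiplication appears as the special case where the last two coordinates are 0. The function names are hypothetical.

```python
import math


def qmul(p, q):
    """Hamilton product of p = a1 + b1 i + c1 j + d1 k and q = a2 + b2 i + c2 j + d2 k."""
    a1, b1, c1, d1 = p
    a2, b2, c2, d2 = q
    return (
        a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,
        a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,
        a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,
        a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2,
    )


def norm(v):
    """Euclidean norm on R^4."""
    return math.sqrt(sum(x * x for x in v))
```

For example, qmul((1, 2, 3, 4), (5, 6, 7, 8)) gives (-60, 12, 30, 24), whose squared norm 5220 equals 30 · 174. Unlike complex multiplication, this product is not commutative (ij = k but ji = -k).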

Abstract: An Euler system is a powerful tool in number theory for bounding the size of Selmer groups. We start by giving a brief history, and then explain the need to generalize this machinery to the framework of deformations, as well as the technical difficulties in commutative algebra that arise in such generalizations. If time permits, we will talk about ongoing joint work with Shimomoto on generalized Euler systems.

Abstract:

Abstract: TBA

Abstract: TBA

Abstract: TBA

Abstract: TBA

Abstract: The monodromy group of a hypergeometric differential equation is defined as the image of the fundamental group G of the Riemann sphere minus three points (namely 0, 1 and the point at infinity) under a certain representation of G in the general linear group GL_n. By a theorem of Levelt, the monodromy groups are the subgroups of GL_n generated by the companion matrices of two monic polynomials f and g of degree n. Suppose we start with two monic polynomials f, g of degree n with integer coefficients which satisfy some "conditions" and have f(0)=g(0)=1 (resp. f(0)=1, g(0)=-1). Then the associated monodromy group preserves a non-degenerate integral symplectic form (resp. quadratic form); that is, the monodromy group is a subgroup of the integral symplectic group (resp. orthogonal group) of the associated symplectic form (resp. quadratic form). In this talk, we will describe a sufficient condition on a pair of polynomials for the associated monodromy group to be an arithmetic subgroup (a subgroup of finite index) of the integral symplectic group, and show some examples of arithmetic orthogonal monodromy groups.
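The generators in Levelt's description are companion matrices. The sketch below builds them for the illustrative pair f = (x-1)^4 and g = (x+1)^4, both with constant term 1, which is the symplectic case. It checks two elementary facts. First, each matrix satisfies its own polynomial (Cayley-Hamilton). Second, the two matrices differ only in their last column, so A^{-1}B is a pseudo-reflection. The sketch does not decide arithmeticity. The helper names and the example pair are assumptions made for the example.

```python
def companion(coeffs):
    """Companion matrix of monic f(x) = x^n + a_{n-1} x^{n-1} + ... + a_0.

    coeffs = [a_0, ..., a_{n-1}]; ones on the subdiagonal, -a_i in the last column.
    """
    n = len(coeffs)
    m = [[0] * n for _ in range(n)]
    for i in range(1, n):
        m[i][i - 1] = 1
    for i in range(n):
        m[i][n - 1] = -coeffs[i]
    return m


def matmul(p, q):
    """Product of two square integer matrices given as lists of rows."""
    n = len(p)
    return [[sum(p[i][k] * q[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def poly_at_matrix(coeffs, m):
    """Evaluate the monic polynomial with lower coefficients `coeffs` at m (Horner's rule)."""
    n = len(m)
    ident = [[int(i == j) for j in range(n)] for i in range(n)]
    result = ident  # leading coefficient 1
    for a in reversed(coeffs):  # a_{n-1}, ..., a_0
        result = matmul(result, m)
        result = [[result[i][j] + a * ident[i][j] for j in range(n)] for i in range(n)]
    return result


F = [1, -4, 6, -4]  # (x - 1)^4 = x^4 - 4x^3 + 6x^2 - 4x + 1
G = [1, 4, 6, 4]    # (x + 1)^4 = x^4 + 4x^3 + 6x^2 + 4x + 1
A, B = companion(F), companion(G)
```

Because B - A has rank at most one, A^{-1}B - I = A^{-1}(B - A) also has rank at most one. This is the reflection-type generator that appears in Levelt's theorem.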

Abstract: TBA

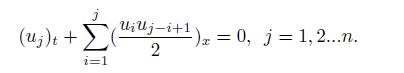

Abstract: Systems of conservation laws which are not strictly hyperbolic appear in many physical applications. Generally, for these systems the solution space is larger than the usual BV_loc space, and the classical Glimm-Lax theory does not apply. We start with the non-strictly hyperbolic system

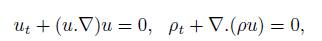

For n = 1, the above system is the celebrated Burgers equation, which was studied extensively by E. Hopf. For n = 2, the above system describes a one-dimensional model for large-scale structure formation in the universe. We study the case n = 4 of the above system, using the vanishing viscosity approach for Riemann-type initial and boundary data and a possible integral formulation when the solution has a nice structure. For a certain class of general initial data, we construct weak asymptotic solutions in the sense developed by Panov and Shelkovich. As an application, we study the zero-pressure gas dynamics system, namely,

where ρ and u are the density and velocity components, respectively.

Abstract: I will recall basic definitions and facts from algebraic geometry and the geometry of quadrics, and then I will explain the relation of vector bundles and the Hitchin map to these geometric facts.


Product of eigenforms on March 19, 2015 at 4:00 pm in FB567 by Dr. Jaban Meher


Abstract: I will discuss all the cases in which the product of two eigenforms is again an eigenform. This talk is based on one of my papers together with my recent joint work with Soumya Das.

Abstract: In this talk, we will discuss injectivity sets for the twisted spherical means on $\mathbb C^n.$ Specifically, I will explain the following recent result: a complex cone is a set of injectivity for the twisted spherical means for the class of all continuous functions on $\mathbb C^n$ as long as it does not lie completely on the level surface of any bi-graded homogeneous harmonic polynomial on $\mathbb C^n.$

Abstract: In this talk we will survey recent developments in the analysis of partial differential equations arising in image processing, with particular emphasis on a forward-backward regularization. We prove a series of existence, uniqueness and regularity results for viscosity, weak and dissipative solutions of general forward-backward diffusion flows.


Abstract: Fuzzy logic is one of the many generalizations of classical logic, where the truth values are allowed to lie in the entire unit interval [0, 1] rather than just the set {0, 1}. Fuzzy implications generalize classical implication from two-valued logic to the multivalued setting. In this presentation, we will talk about a novel generative method, called the … composition of fuzzy implications, that we have proposed. Denoting the set of all fuzzy implications defined on [0, 1] by I, the … composition serves both as (i) a generating method of fuzzy implications and (ii) a binary operation on the set I.

The rest of the talk will be a discussion of the …

Secondly, looking at the …

Abstract:

In the first part of the talk, we study infection spread in random geometric graphs where n nodes are distributed uniformly in the unit square W centred at the origin and two nodes are joined by an edge if the Euclidean distance between them is less than … . Assuming edge passage times are exponentially distributed with unit mean, we obtain upper and lower bounds for the speed of infection spread in the sub-connectivity regime, … .

In the second part of the talk, we discuss the convergence rate of sums of locally determinable functionals of Poisson processes. Denoting the Poisson process as N, the functional as f and Lebesgue measure as …, we establish corresponding bounds for … in terms of the decay rate of the radius of determinability.

About the speaker:

J. Michael Dunn is Oscar Ewing Professor Emeritus of Philosophy and Professor Emeritus of Computer Science and of Informatics at Indiana University Bloomington. Dunn's research focuses on information-based logics and the relations between logic and computer science. He is particularly interested in so-called "substructural logics", including intuitionistic logic, relevance logic, linear logic, BCK-logic and the Lambek calculus. He has developed an algebraic approach to these and many other logics under the heading of "gaggle theory" (for generalized Galois logics). He has done recent work on the relationship of quantum logic to quantum computation and on subjective probability in the context of incomplete and conflicting information. He has a general interest in cognitive science and the philosophy of mind.

Abstract:

I will begin by discussing the history of quantum logic, dividing it into three eras or lives. The first life has to do with Birkhoff and von Neumann's algebraic approach in the 1930s. The second life has to do with the attempt to understand quantum logic as logic, which began in the late 1950s and blossomed in the 1970s. The third life has to do with recent developments in quantum logic coming from its connections to quantum computation. I shall review the structure and potential advantages of quantum computing and then discuss my own recent work with Lawrence Moss, Tobias Hagge and Zhenghan Wang, which connects quantum logic to quantum computation by viewing quantum logic as the logic of quantum registers storing qubits, i.e., "quantum bits". Unlike a classical bit, whose only values are 0 and 1, a qubit has infinitely many possible states, of which 0 and 1 are just two. Given sufficient time, I will mention some earlier work of mine on mathematics based on quantum logic.